GPT-5 is here: Five Really Simple Things AI Still Can't Do

Beyond the old "creativity" and "empathy" complaints: what we aren't comfortable handing over to AI just yet

The new and improved GPT-5 model was launched recently.

Arriving about two years after the previous generation, it was surprisingly light on major new features. The launch, however, wasn’t free of problems.

The Altman team had good intentions, trying to unify its various models. But the forced transition disrupted workflows and rubbed many users the wrong way.

It can be argued, though, that what GPT and other models need are not new features, but rather improved integration and optimisation for existing use cases and workflows.

In simple words, the language part of the Large Language Model (LLM) can enable many features that users aren’t able to take advantage of.

This language part acts as a bridge between technology and humans in a way not possible before, and that can save a lot of human time and effort, even without shiny new upgrades.

That’s the goal here: improved intuitiveness. The machine works for the person rather than the other way around, and the person spends less time working around the device’s language to get things done.

AI models might always have classic problems, like a lack of creativity and empathy, that make them unable to do some things for us. But there are many simple things they could do and just aren’t programmed to do yet.

Focus on More Than One Problem (Contextual AI)

If I ask you one day why my back hurts and on another day ask you to recommend some good chairs, you might immediately figure out why I am asking the second question.

But in the case of chatbots, unless you specifically ask these questions in the same chat, they likely won't pick up on this clue.

A server-side "brain" doesn't connect the dots the way a pattern-finding human brain can. It fails to recognise that a detail from one chat can be useful in another.

For instance, try this: if ChatGPT knows a lot about you, ask it “What should I eat today?”

ChatGPT likely won’t immediately draw on any health or diet-related information you have already given it and will instead suggest generic options, as it did for me.

However, if you then ask, “Tell me based on what you know about me,” it will give a tailored response.

To be fair, there are valid reasons why you wouldn’t want old chats used as references.

But the usefulness of a model that tends to refer to older chats, even if only as a toggle on a per-chat basis, can’t be ignored.
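To make the per-chat toggle idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `MemoryNote` class, the `TOPIC_KEYWORDS` map, and the `relevant_notes` function are invented for illustration and do not reflect how ChatGPT or any other chatbot actually stores or retrieves memory. A real system would likely use something far richer than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical keyword map: a crude stand-in for whatever relevance
# matching a real chatbot would use.
TOPIC_KEYWORDS = {
    "health": {"back", "pain", "hurts", "chair", "chairs", "posture"},
    "diet": {"eat", "food", "meal", "diet"},
}


@dataclass
class MemoryNote:
    """One detail remembered from an earlier chat (hypothetical)."""
    topic: str
    text: str


def relevant_notes(question: str, notes: list, use_memory: bool) -> list:
    """Return notes from older chats that relate to the new question.

    If the per-chat toggle is off, nothing from older chats is used.
    """
    if not use_memory:
        return []
    # Normalise the question into lowercase words without punctuation.
    words = {w.strip("?.,!").lower() for w in question.split()}
    # A topic matches if the question shares at least one keyword with it.
    topics = {t for t, kws in TOPIC_KEYWORDS.items() if words & kws}
    return [n for n in notes if n.topic in topics]


notes = [
    MemoryNote("health", "User mentioned back pain last week"),
    MemoryNote("diet", "User is vegetarian"),
]

for note in relevant_notes("What are some good chairs?", notes, use_memory=True):
    print(note.text)
```

With the toggle on, the chair question pulls in the back-pain note from an older chat. With it off, the function returns nothing and the chat stays self-contained.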

Being Independent of Devices (Accessible AI)

LLMs are the closest technology has come to feeling more like a friend than a tool. The many people marrying AI chatbots would likely agree.

Whatever your beliefs about AI, millions of people use it daily. That makes how they access it important.

Imagine going out for a walk one day. No agenda. Just some solitude. Enjoy nature and give your thoughts some breathing room.

On this little journey, you might not want to take anything except an AI friend along. Alone but not cut off from the internet.

This was certainly one of the use cases of the Humane AI Pin and the Rabbit R1.

After their disastrous failure, there is no mainstream product that can just be an interactive chatbot, so you can enjoy the blue skies rather than be stuck on content-filled blue screens.

After all, a wearable assistant only disturbs you when prompted, unlike the constantly buzzing and addictive smartphone.

As a digital minimalist, I try hard to use my devices sparingly and intentionally, as many of you might too. Separating digital tools is one way to do that.

But until OpenAI and Jony Ive release their AI wearables, all chatbots remain stuck on mobile and desktop, where Big Tech’s dominance lies.

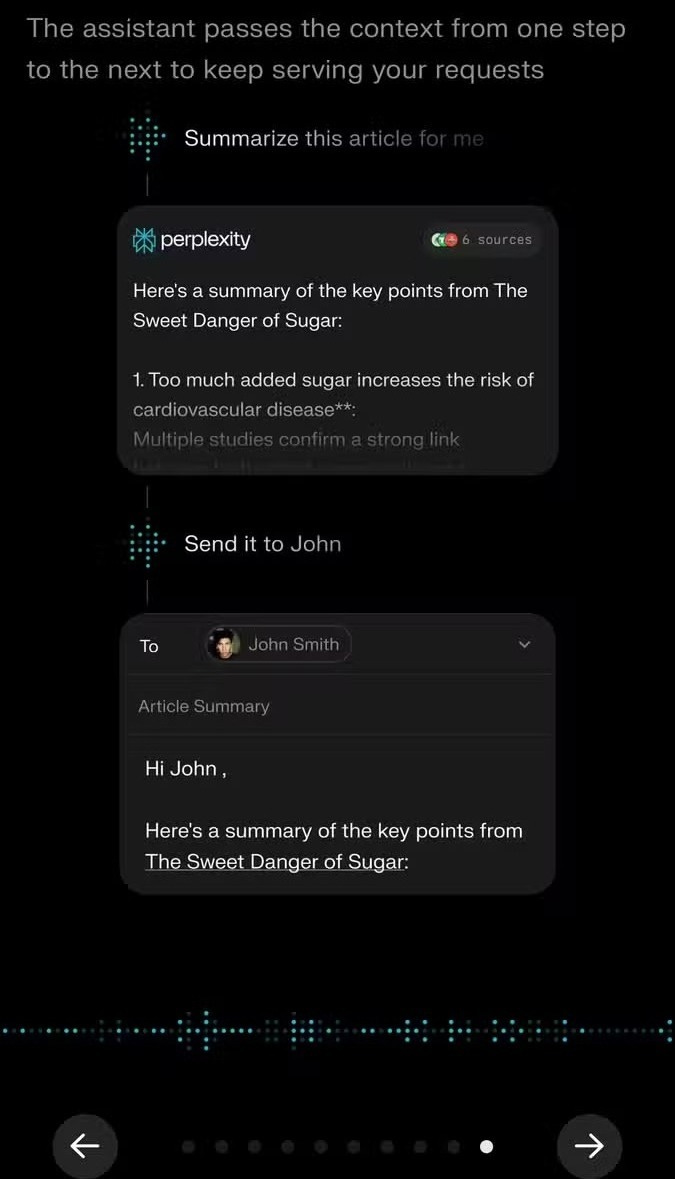

Doing Simple Tasks On-Device (Agentic AI)

If AI chatbots have to be present on existing mobile devices, their presence can at least be made more meaningful. For example, allowing them to perform some of the boring tasks on the user’s behalf.

Machines are meant to replace humans in repetitive tasks. Knowing this, it’s a wonder that such a seemingly perfect use case for LLMs isn’t yet implemented in the consumer space.

Yes, services like ChatGPT Agent do exist, helping with things like planning a vacation. However, they are only meant for web tasks or vibe coding, not general on-device tasks.

AI assistants (the predecessors to chatbots) can already control many aspects of devices, like sending a tweet or showing you specific pictures from your gallery.

Google Assistant and Samsung Bixby were particularly adept at this.

That means modern operating systems have the necessary APIs in place to allow an app to perform such actions.

Credit where due, these newer chatbots do try to mimic the features.

But device control and access on ChatGPT or Perplexity seem more like an afterthought— they fall flat quickly. And again, it’s an access problem, not a technical one.

That’s unfortunate because there are so many simple tasks the chatbot apps can do on the phone and the desktop when given the correct access.

And unlike the “dumber” assistants, LLM chatbots could handle much more complex commands and queries.

Suppose you are in a meeting and want to set up DND, so you give this command—

“hey I am in a meeting. let no one except my mom disturb me. To all other contacts who message me, say ‘In a meeting’ for the next hour”

This will help you not fiddle with your phone and actually focus.

Any chatbot would understand this command just fine. It just won’t be able to act on it and will instead reply with variations of “I do not have access to your contacts and messaging apps”.
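For the curious, here is a rough Python sketch of what that spoken command could look like once a chatbot has turned it into something a phone can act on. The `DndRule` class and its fields are hypothetical, made up for this article, and not part of any real assistant or OS API. The sketch assumes the device hands each incoming message's sender to the rule.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class DndRule:
    """Hypothetical structured form of the 'I am in a meeting' command."""
    allowed_contacts: set   # contacts who may still get through
    auto_reply: str         # text sent back to everyone else
    expires_at: datetime    # when the rule stops applying
    already_replied: set = field(default_factory=set)

    def handle_incoming(self, sender: str, now: datetime) -> Optional[str]:
        """Return the auto-reply to send, or None if nothing should be sent."""
        if now >= self.expires_at:
            return None  # the hour is over, normal behaviour resumes
        if sender in self.allowed_contacts:
            return None  # allowed contacts get through, no auto-reply
        if sender in self.already_replied:
            return None  # reply to each sender only once
        self.already_replied.add(sender)
        return self.auto_reply


start = datetime(2025, 1, 1, 10, 0)
rule = DndRule(
    allowed_contacts={"Mom"},
    auto_reply="In a meeting",
    expires_at=start + timedelta(hours=1),
)

print(rule.handle_incoming("Alex", start + timedelta(minutes=5)))
print(rule.handle_incoming("Mom", start + timedelta(minutes=6)))
```

Note that the rule only ever reacts to someone who actually messages you. It never loops over the contact list, which is the design choice that matters most for a command like this.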

Or, take file management. Commands like—

“Copy all my last month’s documents into the device I just mounted and eject it” or “select just the media in this folder and copy to a different folder”

While not groundbreaking, these may save a few seconds or clicks.

In the long run, even such small gains can add up, speeding up workflows and freeing up the user’s mental resources.

None of the companies seems focused on this type of integration yet. Not even Big Tech companies like Google or Amazon, which were historically all in on the smart home future. All that’s there are some vague claims from Microsoft.

The reason is understandable, though frustrating.

What if, in our earlier situation, instead of messaging just the people who text during your meeting time, it texts all your contacts the words “in a meeting”?

Everyone will receive that message. From your college buddies you last saw 20 years ago to your 70-year-old granny.

Or consider what happened with Replit, whose AI agent deleted a company’s entire database despite multiple explicit instructions not to.

Any wrongly understood command may lead to unintended actions and can easily prove disastrous.

Because of hallucinations and bugs, most users rightly feel uncomfortable giving chatbots such access.

The point here is not to convince these people. It is to give a hint to companies that agentic mode on a smaller level can be a reality—

Instead of giving full file access, maybe start with specific folders.
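As a rough illustration of folder-level access, the Python sketch below checks whether a file an agent wants to touch sits inside a folder the user has approved. The function name `is_within_allowed` and the example paths are hypothetical. This is only a sketch of the idea, not how any existing chatbot app or operating system enforces permissions.

```python
from pathlib import Path


def is_within_allowed(target: Path, allowed_roots: list) -> bool:
    """Return True only if target lies inside one of the approved folders."""
    # Resolve first so tricks like "Documents/../Private" are normalised away.
    resolved = target.resolve()
    for root in allowed_roots:
        try:
            # relative_to raises ValueError when resolved is not under root.
            resolved.relative_to(root.resolve())
            return True
        except ValueError:
            continue
    return False


# Hypothetical folder the user has approved for the agent.
allowed = [Path("/tmp/agent_demo/Documents")]

print(is_within_allowed(Path("/tmp/agent_demo/Documents/report.txt"), allowed))
print(is_within_allowed(Path("/tmp/agent_demo/Documents/../Private/notes.txt"), allowed))
```

An agent that runs a check like this before every file operation can still misunderstand a command, but the damage stays inside the folders you handed over.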

For people into customisation, dragging and dropping widgets and icons is a pain. A command like “Hey, just drop the Calendar widget on the top of my home screen” has little chance of going wrong and can still save time and effort.

A little goes a long way.

Respond Unprompted With Updates (Active AI)

The days of ChatGPT being restricted to a September 2021 knowledge cutoff seem like a bygone era now that GPT-5 has launched.

All major AI chatbots can now access the internet to give you (at least what they consider) up-to-date answers.

But chats still feel one-sided.

AI can never take full advantage of the power of the internet unless it is programmed to follow up when it finds updated information.

Only Meta AI seems to be working on such a feature with its Project Omni. Admittedly, it is intended more for user retention and engaging conversations.

Unprompted follow-ups can have more functional uses too.

Suppose you were researching Pope Francis, and during that time His Holiness passed away. Such a heads-up would very likely be relevant to you, right? Even the AI might agree. It is, however, programmed to inform you only when prompted.

Unprompted replies, provided they are not as persistent as a drunk ex, can really position the chatbot as “more human”. If it genuinely follows up on chats, it can appear to be “caring”. Dare I say, not unlike a real human.

The models being updated also provide an opportunity to rewrite chat answers generated with older models.

Of course, keeping in mind the massive resources AI is taking right now, and user sanity, this should only be done for relevant chats.

You wouldn’t want updated answers to a homework problem for a course you have already graduated from, would you?

Respond to a Group of People (Conversational AI)

Voice Mode on ChatGPT blew away people around me. We all had little talking sessions whenever we got time, with people taking turns asking the model wacky questions.

When its answers were correct, it felt a generation apart from older voice assistants.

During one of these sessions, though, I realised something: the bot was responding the same way to everyone in the group. It did not remember what each person had asked or behave any differently with them.

It wasn’t a problem then, but it could be in other circumstances.

One of the times a person feels most human is when talking among a group.

The effort required to remember and respond to different people may just be one of the best benchmarks of intelligence.

And here, technology has already enabled a machine to mimic this behavior: Google Assistant and Alexa both have voice recognition and household profiles that let them respond differently to different members of the family.

Talking to an AI chatbot will significantly level up when it can also converse with your family and friends.

Of course, without guardrails, it might unapologetically reveal secrets you told it in confidence, which would be embarrassing. But that’s more a training challenge than a technical one.

Having a family pricing plan may also be incredibly lucrative for OpenAI and others. So if you see any announcement of such a feature, don’t be surprised.

Shouldn’t AI be a Good Assistant First?

The following types of AI were discussed here—

Contextual AI (Having an advanced memory to connect the dots inter-chat)

Accessible AI (Being always available)

Agentic AI (Doing simple tasks on user’s behalf)

Active AI (Replying unprompted)

Conversational AI (Conversing with different people differently)

There can be many more types based on other use cases. But what all these have in common is that they assist the user. They don’t perform the primary work.

The point to drive home is that the models don’t need any new technical upgrades for any of these to work. They need deeper integration or simply borrowing from existing AI assistants like Google Assistant or Amazon Alexa.

Development efforts right now are instead focused on primary work. Activities like—

Content Generation

Coding

Deep Research

All of these require much more “intelligence” from the models to actually deliver. Instead of working to replace humans, AI should first be able to assist them. After all, that’s how most people in the real world enter the workforce, right?